The Best-Performing Open-Source Image Generation Model: Tencent Open-Sources HunYuanImage 3.0

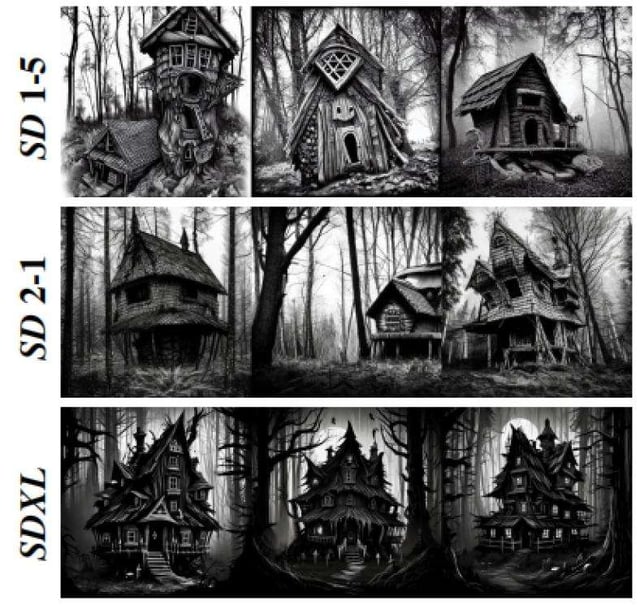

Tensor.Art will soon support online generation and has partnered with Tencent HunYuan on an official event. Stay tuned for exciting content and generous prizes!

September 28, 2025: Tencent HunYuan today announced and open-sourced HunYuanImage 3.0, a native multimodal image generation model with 80B parameters. HunYuanImage 3.0 is the first open-source, industrial-grade native multimodal text-to-image model. It is currently the largest and best-performing open-source image generator, benchmarked against leading closed-source systems.

Users can try HunYuanImage 3.0 on the desktop version of the Tencent HunYuan website (https://hunyuan.tencent.com/image), and Tensor.Art (https://tensor.art) will soon support online generation. The model will also roll out on Yuanbao. Model weights and accelerated builds are available on GitHub and Hugging Face, and both enterprises and individual developers may download and use them free of charge.

HunYuanImage 3.0 combines commonsense and knowledge-based reasoning, accurate semantic understanding, and refined aesthetics to produce high-fidelity, photorealistic images. It can parse prompts running to a thousand characters and render long passages of text inside images, delivering industry-leading generation quality.

What “native multimodal” means

“Native multimodal” refers to an architecture in which a single model handles input and output across text, image, video, and audio. The alternative is to chain together separate models for tasks such as image understanding and image generation. HunYuanImage 3.0 is the first open-source, industrial-grade text-to-image model built on this native multimodal foundation.

In practice, this means HunYuanImage 3.0 not only “paints” like an image model but also “thinks” like a language model with built-in commonsense.
It’s like a painter with a brain: it reasons about layout, composition, and brushwork, and it uses world knowledge to infer plausible details.

Example: A user can simply prompt “Generate a four-panel educational comic explaining a total lunar eclipse,” and the model will plan and draw a coherent, panel-by-panel story on its own. No frame-by-frame instructions are required.

Better semantics, better typography, better looks

HunYuanImage 3.0 significantly improves semantic fidelity and aesthetic quality. It follows complex instructions precisely, including instructions to render small text and long passages within images.

Example: “You are a Xiaohongshu outfit blogger. Create a cover image with: 1) Full-body OOTD on the left; 2) On the right, a breakdown of items: dark brown jacket, black pleated mini skirt, brown boots, black handbag. Style: product photography, realistic, with mood; palette: autumn ‘Marron/MeLàde’ tones.” HunYuanImage 3.0 accurately breaks the outfit on the left down into itemized product visuals on the right.

For copy-heavy posters, HunYuanImage 3.0 cleanly renders text in multiple regions (top, bottom, and accents) while keeping a clear visual hierarchy and a harmonious color scheme and layout. One example is a tomato product poster with dewy, glossy, appetizing fruit and a premium photographic feel.

It also excels at creative briefs, such as a Mid-Autumn Festival concept featuring a moon, penguins, and mooncakes, where it delivers strong composition and storytelling.

These capabilities can significantly boost productivity for illustrators, designers, and visual creators. Comics that once took hours to draft can now be sketched out in minutes, and non-designers can produce richer, more engaging visual content. Researchers and developers in industry and academia can build applications or fine-tune derivative models on top of HunYuanImage 3.0.

Why architecture matters now

In text-to-image generation, both academia and industry are moving from traditional DiT (Diffusion Transformer) architectures to native multimodal ones.
Several open-source native multimodal models exist, but most are small research models whose image quality falls far short of the best industrial systems.

As a native multimodal open-source model, HunYuanImage 3.0 re-architects training to support multiple tasks and cross-task synergy. It is built on HunYuan-A13B and was trained on roughly 5 billion image-text pairs, along with video frames, interleaved text-image data, and about 6 trillion tokens of text. Training used a joint setup that combines multimodal generation, visual understanding, and language modeling. The result is strong semantic comprehension, robust long-text rendering, and LLM-grade world knowledge for reasoning.

The current release supports text-to-image generation. Image-to-image generation, image editing, and multi-turn interaction will follow.

Track record & open-source commitment

Tencent HunYuan has continuously advanced image generation. Its previous releases include HunYuan DiT, the first open-source Chinese-native DiT image model; HunYuanImage 2.1, a native 2K model; and HunYuanImage 2.0, the industry’s first industrial-grade real-time image generator.

HunYuan is committed to open source. It offers LLMs in multiple sizes, comprehensive image, video, and 3D generation capabilities, and tools and plugins whose performance approaches that of commercial models. The ecosystem includes about 3,000 derivative image and video models, and the HunYuan 3D series has more than 2.3 million community downloads. This makes it one of the world’s most popular open-source 3D model families.

Links

The model will soon be available for online generation on Tensor.Art.

Model Playground (desktop only): https://hunyuan.tencent.com/modelSquare/home/play?from=modelSquare&modelId=289

Official Site: https://hunyuan.tencent.com/image

GitHub: https://github.com/Tencent-HunYuan/HunyuanImage-3.0

Hugging Face: https://huggingface.co/tencent/HunYuanImage-3.0

HunYuanImage 3.0 Prompt Handbook: https://docs.qq.com/doc/DUVVadmhCdG9qRXBU

Sample Generations & Prompts (English translations provided)

A wide image taken with a phone of a glass whiteboard, in a room overlooking the Bay Bridge. The field of view shows a woman writing.
The handwriting looks natural and a bit messy, and we see the photographer's reflection. The text reads: (left) "Transfer between Modalities: Suppose we directly model p(text, pixels, sound) [equation] with one big autoregressive transformer. Pros: image generation augmented with vast world knowledge next-level text rendering native in-context learning unified post-training stack Cons: varying bit-rate across modalities compute not adaptive" (Right) "Fixes: model compressed representations compose autoregressive prior with a powerful decoder" On the bottom right of the board, she draws a diagram: "tokens -> [transformer] -> [diffusion] -> pixels"

Young Asian woman sitting cross-legged by a small campfire on a night beach, warm light glinting on her skin, shoulder-length wavy hair, oversized knit sweater slipped off one shoulder, holding a burning newspaper (half-scorched), high-contrast warm orange firelight under a deep-blue sky, film-grain texture, waist-up angle.

Young East Asian woman with fair, delicate skin and an oval face. Clear, refined features; large, bright dark-brown eyes looking directly at the viewer; natural brows matching hair color; petite, straight nose; full lips with pale-pink gloss. Shiny brown hair center-parted into two neat braids tied with white ruffled fabric bows. Wispy bangs and strands blown lightly by wind. Wearing a white camisole with delicate white lace trim at the neckline and straps; bare shoulders, smooth skin. Key light from front-right creating highlights on cheeks, nose bridge, and collarbones. Background: expansive water in deep blue, distant land with dark-green trees, lavender sky suggesting dusk or dawn. Overall warm, gentle tonality.

Neo-Chinese product photography: a light-green square tea box with elegant typography (“Eco-Tea”) and simple graphics in a Zen-inspired vignette: ground covered with fine-textured emerald moss, paired with a naturally shaped dead branch, accented by white jasmine blossoms.
Soft gradient light-green background with blurred bamboo leaves in the top-right. Palette: fresh light greens; white flowers for highlights. Eye-level composition with the box appearing to hover lightly above the branch. Fine moss texture, natural wood grain, crisp flowers, soft lighting for a pure, tranquil mood.

Zen-inspired luxury perfume still life: a square transparent bottle with warm golden liquid and black cap on a dark-brown pedestal. Deep blue gradient backdrop with a minimalist black branch casting sharp silhouettes and three white magnolias in bloom. Palette: deep blue, warm gold, pure white, rich black; eye-level, centered composition with soft light, premium finish, and Eastern floristry aesthetics.

Advertising still life: a floating ketchup bottle surrounded by fresh tomatoes with splashes and flying juice. Dominant rich red scene; realistic, high-impact style; crystalline splash details and plump tomatoes. Center composition focusing on the bottle and explosion. Include label text: “WELL 威尔番茄沙司 净含量 300g” (Chinese for “WELL tomato ketchup, net weight 300 g”).

Neo-Chinese, Zen-style premium tea still life: light-blue “Seek-Tea” box with refined Chinese typography and gold-foil motifs; sky-blue gaiwan (bowl, lid, saucer) with a smooth glaze; and a deep-blue vase. Two dark wood furniture pieces: taller stand on the left, shorter stand on the right with gold vertical metal trim. Red-orange gradient background (deep red top → light orange bottom) with soft bamboo shadows. Palette: red-orange, light blue, sky-blue, deep blue, dark wood, gold. Eye-level, symmetric layout; soft bamboo shadows, fine wood grain, warm ceramic sheen, refined metal textures; soft light for a high-end, Eastern ambience.

Lifestyle product photo: a woman with voluminous curls wearing over-ear headphones and a loose pale-yellow sweatshirt, seated by a sun-lit window. Background: bright yellow wall and clear blue sky. Warm, comforting vibe; palette dominated by yellows with fresh blue accents.
Side view, relaxed pose (elbow on desk, cheek in hand, gaze drifting). Details: curly texture, compact wireless headphones, translucent brown glass cup, minimalist devices, gentle natural-light shadows; cozy and serene.

Illustration style: a step-by-step tutorial explaining how to make a latte. Must include a title and English step descriptions.